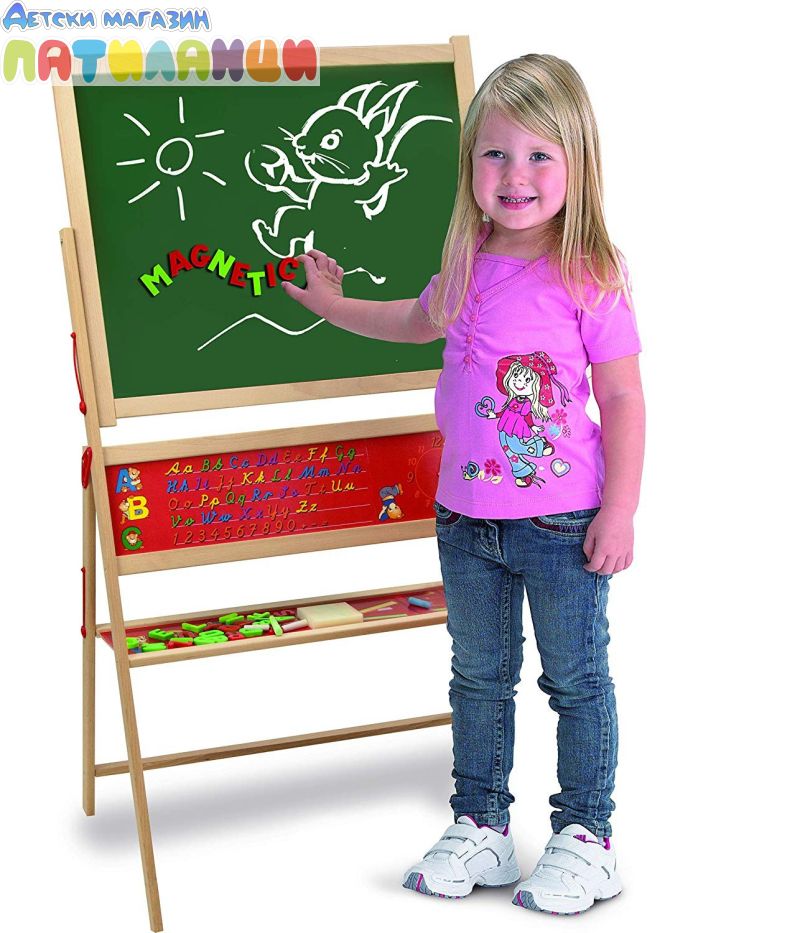

2-in-1 Magnetic Board for Drawing and Writing, in Drawing and Coloring, town of Ihtiman - ID23460798 - Bazar.bg

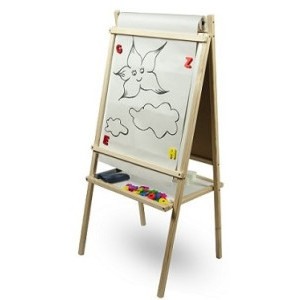

Mousetoys.eu online store for wooden toys, Double-sided magnetic writing board, BOARDS AND ABACUSES, LEARN AND PLAY - BOARDS AND ABACUSES

Order: drawing and writing magnetic puzzle board, double-sided easel, children's wooden toy, sketch pad, gift, educational toy for children's intellectual development / Toys and Hobbies - Revelnail.shop